CNN Explainability, Bias Determination, and Style Transfer

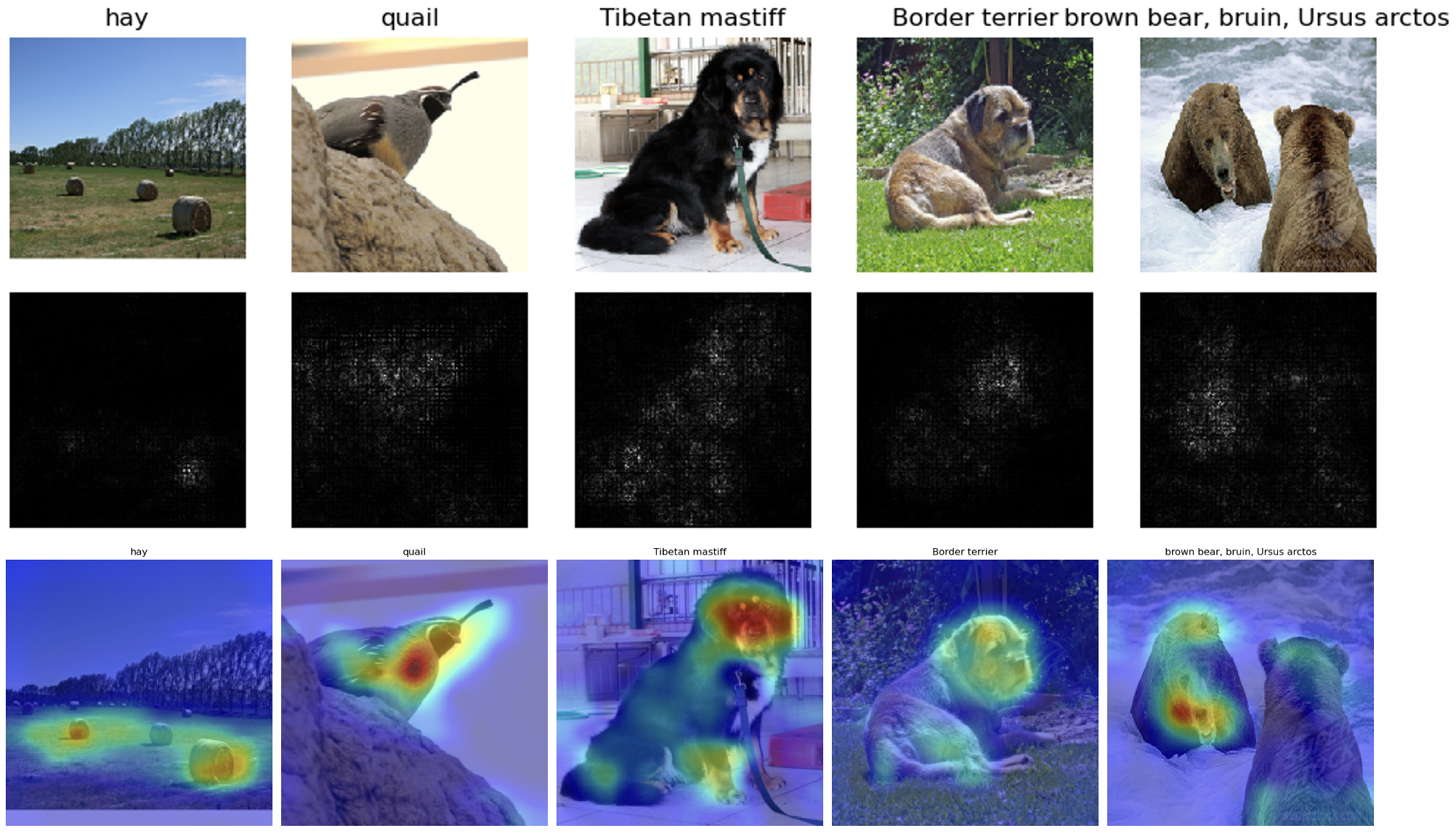

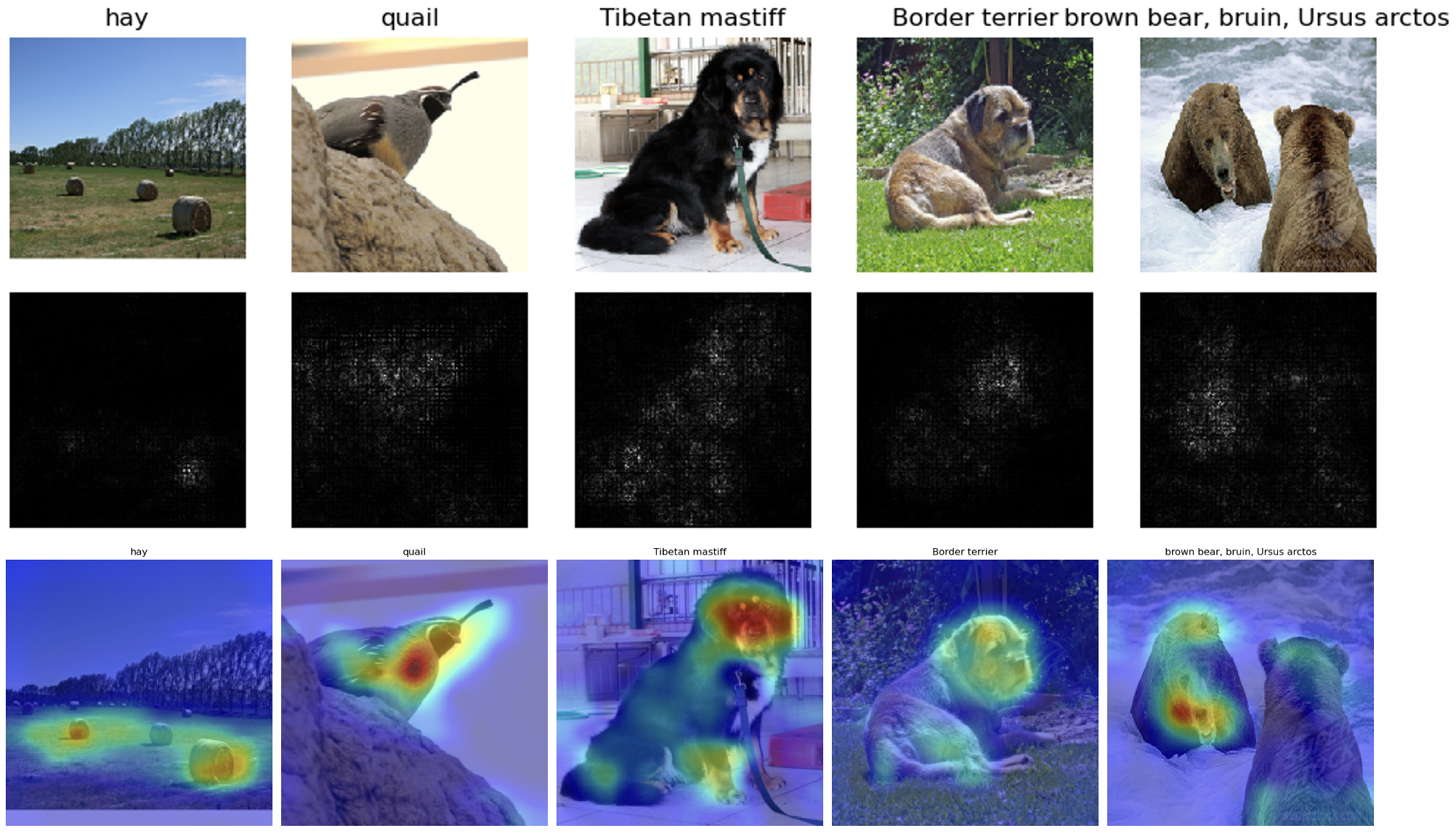

- Implemented explainability methods (class model visualization, class saliency maps, Grad-CAM, guided Grad-CAM, and Captum layer attribution) on top of a SqueezeNet model (ImageNet dataset) to identify network bias [Python, PyTorch]

- Evaluated the SqueezeNet model's stability by generating fooling images (adversarial examples) [Python, PyTorch]

- Implemented style transfer with the SqueezeNet model by combining several loss functions to create artistic images
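
As an illustration of two of the techniques listed above, here is a minimal sketch of a class saliency map and a fooling image in PyTorch. It is not the project's actual code: the function names `compute_saliency` and `make_fooling_image`, the step size, and the iteration cap are all hypothetical, and a tiny randomly initialized CNN stands in for the pretrained SqueezeNet so the snippet runs without downloading weights.

```python
import torch
import torch.nn as nn


def compute_saliency(model, images, labels):
    """Saliency = |d(correct-class score) / d(pixel)|, maxed over color channels."""
    model.eval()
    images = images.detach().clone().requires_grad_(True)
    scores = model(images)                                      # (N, num_classes)
    correct = scores.gather(1, labels.view(-1, 1)).squeeze(1)   # (N,)
    correct.sum().backward()
    saliency, _ = images.grad.abs().max(dim=1)                  # (N, H, W)
    return saliency


def make_fooling_image(model, image, target, lr=1.0, max_iter=100):
    """Gradient ascent on the target-class score, stopping once the prediction flips
    or after max_iter steps (convergence is not guaranteed)."""
    model.eval()
    fooling = image.detach().clone().requires_grad_(True)
    for _ in range(max_iter):
        scores = model(fooling)
        if scores.argmax(dim=1).item() == target:
            break
        scores[0, target].backward()
        with torch.no_grad():
            grad = fooling.grad
            fooling += lr * grad / (grad.norm() + 1e-12)        # normalized step
            fooling.grad.zero_()
    return fooling.detach()


# Assumption: a small stand-in classifier, NOT SqueezeNet.
torch.manual_seed(0)
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(8, 10),
)

images = torch.rand(2, 3, 32, 32)
labels = torch.tensor([1, 7])
saliency = compute_saliency(model, images, labels)

image = images[:1]
fooled = make_fooling_image(model, image, target=3)
```

With a real pretrained network, the saliency map highlights the pixels that most affect the correct-class score, which is one way to spot a model relying on background cues rather than the object itself.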