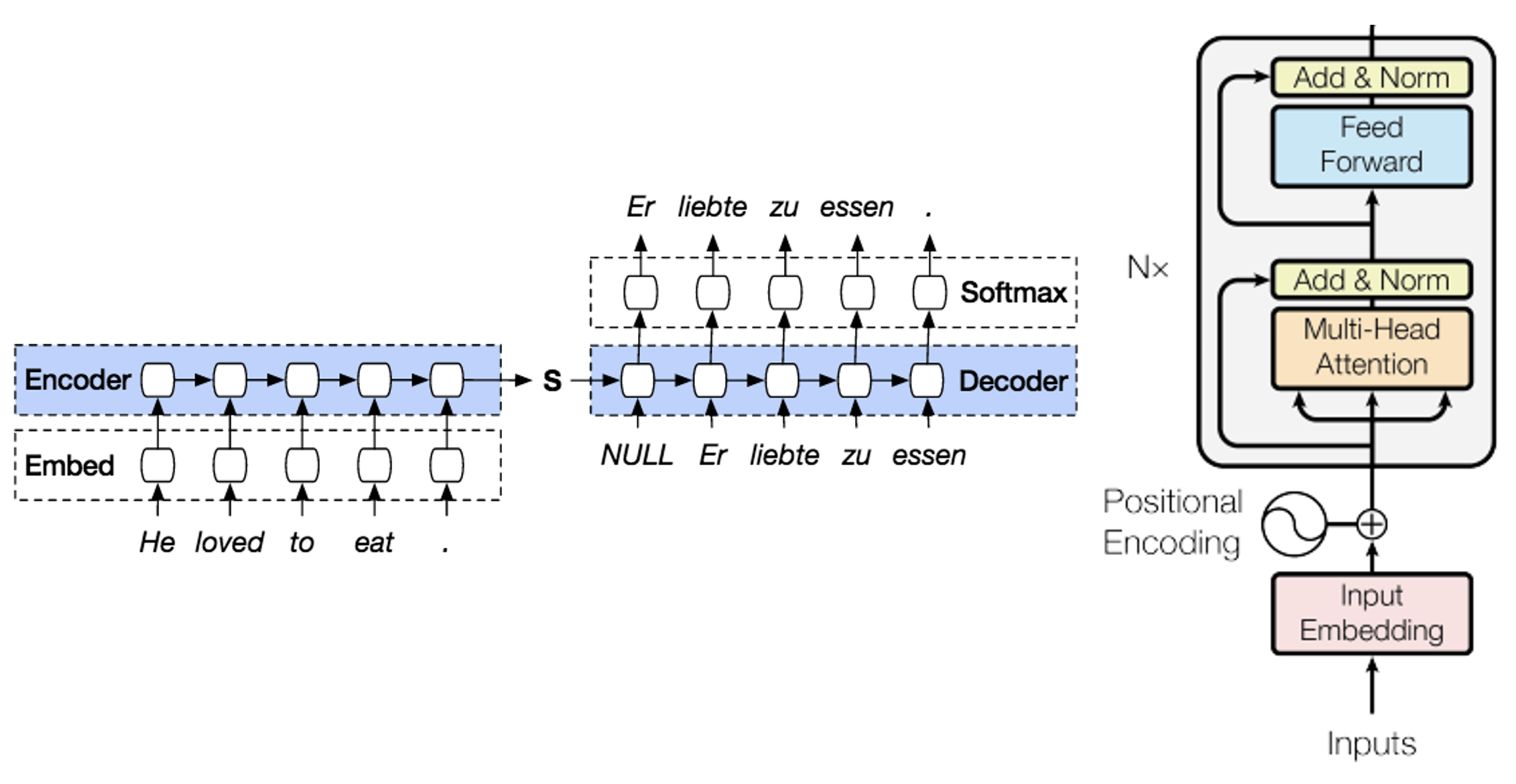

Machine Translation Using Seq2Seq and Transformer Models on the Multi30K Dataset

Encoded tokens and padded sequences using TorchText and spaCy
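The encoding and padding step can be sketched without any dependencies. This is a minimal illustration, not the project's code: a lowercase whitespace split stands in for the spaCy tokenizer, a plain dict stands in for a TorchText vocabulary, and the special-token names (`<pad>`, `<unk>`, `<bos>`, `<eos>`) and toy sentences are assumptions.

```python
from collections import Counter

# Assumed special tokens; <pad> gets index 0 so padding is easy to mask later.
PAD, UNK, BOS, EOS = "<pad>", "<unk>", "<bos>", "<eos>"

def tokenize(sentence):
    # Stand-in for a spaCy tokenizer: lowercase, then split on whitespace.
    return sentence.lower().split()

def build_vocab(sentences, min_freq=1):
    # Count tokens and assign indices after the special tokens.
    counts = Counter(tok for s in sentences for tok in tokenize(s))
    itos = [PAD, UNK, BOS, EOS] + sorted(t for t, n in counts.items() if n >= min_freq)
    return {tok: i for i, tok in enumerate(itos)}

def encode(sentence, stoi):
    # Wrap with <bos>/<eos>; tokens missing from the vocabulary map to <unk>.
    toks = [BOS] + tokenize(sentence) + [EOS]
    return [stoi.get(t, stoi[UNK]) for t in toks]

def pad_batch(seqs, pad_id):
    # Right-pad every sequence to the length of the longest one in the batch.
    max_len = max(len(s) for s in seqs)
    return [s + [pad_id] * (max_len - len(s)) for s in seqs]

corpus = ["Two dogs play in the snow .", "A man rides a bike ."]
stoi = build_vocab(corpus)
batch = pad_batch([encode(s, stoi) for s in corpus], stoi[PAD])
print(batch)  # [[2, 14, 7, 10, 8, 13, 12, 4, 3], [2, 5, 9, 11, 5, 6, 4, 3, 0]]
```

The shorter sentence gains one trailing `0` (`<pad>`), so both rows can be stacked into a single rectangular tensor for batched training.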

Trained a Seq2Seq model (RNN and LSTM variants) and a Transformer encoder from scratch for translation [Python, PyTorch]
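A Seq2Seq model of the LSTM kind can be sketched as an encoder whose final hidden and cell states initialize a decoder, trained with teacher forcing. This is a minimal PyTorch sketch under assumptions, not the project's implementation: the embedding and hidden sizes, vocabulary sizes, padding index 0, and random token IDs are all illustrative.

```python
import torch
import torch.nn as nn

class Encoder(nn.Module):
    def __init__(self, src_vocab, emb_dim, hid_dim):
        super().__init__()
        # Assumption: index 0 is the <pad> token.
        self.embed = nn.Embedding(src_vocab, emb_dim, padding_idx=0)
        self.lstm = nn.LSTM(emb_dim, hid_dim, batch_first=True)

    def forward(self, src):
        # src: (batch, src_len); the final (h, c) summarize the source sentence.
        _, (h, c) = self.lstm(self.embed(src))
        return h, c

class Decoder(nn.Module):
    def __init__(self, tgt_vocab, emb_dim, hid_dim):
        super().__init__()
        self.embed = nn.Embedding(tgt_vocab, emb_dim, padding_idx=0)
        self.lstm = nn.LSTM(emb_dim, hid_dim, batch_first=True)
        self.out = nn.Linear(hid_dim, tgt_vocab)

    def forward(self, tgt_in, h, c):
        # Start decoding from the encoder's final states.
        out, _ = self.lstm(self.embed(tgt_in), (h, c))
        return self.out(out)  # (batch, tgt_len, tgt_vocab) logits

class Seq2Seq(nn.Module):
    def __init__(self, src_vocab, tgt_vocab, emb_dim=32, hid_dim=64):
        super().__init__()
        self.encoder = Encoder(src_vocab, emb_dim, hid_dim)
        self.decoder = Decoder(tgt_vocab, emb_dim, hid_dim)

    def forward(self, src, tgt_in):
        h, c = self.encoder(src)
        # Teacher forcing: the decoder sees the gold target prefix.
        return self.decoder(tgt_in, h, c)

torch.manual_seed(0)
model = Seq2Seq(src_vocab=100, tgt_vocab=120)
src = torch.randint(1, 100, (4, 7))   # random stand-in source batch
tgt = torch.randint(1, 120, (4, 9))   # random stand-in target batch
logits = model(src, tgt[:, :-1])      # predict token t+1 from tokens up to t
loss = nn.CrossEntropyLoss(ignore_index=0)(
    logits.reshape(-1, 120), tgt[:, 1:].reshape(-1)
)
loss.backward()
print(logits.shape)  # torch.Size([4, 8, 120])
```

Shifting the target by one position (`tgt[:, :-1]` as input, `tgt[:, 1:]` as labels) is what makes this next-token training; `ignore_index=0` keeps padded positions out of the loss.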